
Assessment of faculty development programs is crucial for providing feedback on performance, with the intent of consistently improving the program as a whole. In many situations, the expedient fix becomes the norm: if something is not working, it is fixed right away. This can result in a chaotic series of back-and-forth changes, some of questionable relevance. It is imperative to use a more structured approach to evaluate the overall effectiveness of the faculty development offering. The best way to gauge how to improve the course experience is to evaluate its effectiveness from multiple perspectives by reviewing primary components, such as logistics, technologies, and curriculum strategies.

Program assessment can include several components or methods to ensure the efficacy of the process and resulting improvements. The components used for the University of Central Florida’s IDL6543 program include faculty surveys, formative evaluations, and an Annual Review Committee. By reviewing the program in this format, outcomes can be assessed, findings can be analyzed, and actions can be taken for enhancements.

Faculty Surveys

The most common method of assessing the effectiveness of the training program is through surveys. They can be distributed to faculty and other stakeholders, and analyzed as a group.

In IDL6543, surveys are distributed after each face-to-face session, and participants are asked about their level of satisfaction with the course content, class sessions, and consultations, among other topics. These surveys are online (convenient), anonymous (to encourage honesty), and optional. A required final survey asks high-level questions. The results are used to improve the program before it is taught again.

The advantage of surveys is that a lot of data can be collected at once and evaluated quickly. However, it is important to keep in mind that surveys may not capture the views of the entire body of learners. For this reason, it is always advised to use a number of methods before making sweeping changes.

Evaluations

Formative Evaluations

Each term, a new set of eyes examines the logistical components of the course. The content in the weekly online modules should be checked for broken links and for anything needing minor correction (a missing word, a date change, etc.). Minor content additions and deletions can also occur in this process.

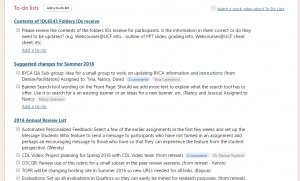

As a whole, though, any moderate or major content changes are withheld until the Annual Review Committee meets. At UCF, items that will be reviewed are submitted within a team project management tool throughout the

year (below).

To-Do Items using Project Management Tool

Another logistical aspect of formative evaluations is reviewing processes and procedures. The IDL6543 Project Manager and Administrative Assistant review current methods of delivery for improvement. This includes updating task lists for new processes that may arise during the delivery of the faculty development program. The IDL6543 Facilitator (ID) also works through a checklist to address items as they occur during the class. Weekly debriefs for the instructional design team help to bring any relevant issues to the forefront.

Summative Evaluations

Annual Review Committee

It is helpful to organize a committee that reviews the training course at least once per year, as things will change and need to be addressed and updated. The IDL6543 Annual Review Committee meets at the end of the summer term to review the ongoing notes kept from debriefs of each delivery of IDL6543. This group begins with a high-level examination of the program and then narrows its focus to the finer details that affect the outcomes. The review covers:

- Logistics – How did the procedures work? Are there processes that need to be changed to streamline the delivery of the course?

- Technology – Were there any issues with the technology, whether online or face-to-face? Is there an emerging technology that would benefit the faculty development course?

- Pedagogy – How are the teaching strategies working? Are there new models or frameworks that would be beneficial for the faculty to know?

As mentioned previously, a list of possible improvements is kept by the instructional design team throughout the year. Each item is reviewed for relevance and ease of implementation. Changes are made, further research is done, and items are adjusted as needed.

The advantage of this oversight committee is the direct, purposeful implementation of revisions rather than an ad hoc approach; however, changes may seem to occur too slowly with this method. If a change warrants quicker deployment, it can be raised in a debrief meeting.

Research

Conducting research is also advised to systematically explore the effectiveness of program changes. For instance, at UCF, the format of IDL6543 changed from face-to-face to blended. Survey data was collected and analyzed from two face-to-face versions and two blended versions to assess satisfaction with the course and perceptions about the faculty development program. It was found that those in the blended format experienced higher overall satisfaction and expressed stronger perceptions about their ability to develop online courses (deNoyelles, Cobb, & Lowe, 2012).

Conclusion

“Formative and summative evaluation of the faculty development education session for online education should be continually conducted. In addition, online researchers should focus their research questions on the effectiveness of the faculty development education sessions in implementing efficient online education” (Ali, Hodson-Carlton, Ryan, Flowers, Rose, et al., 2005).

Fostering continuous improvement through ongoing assessment is critical for the success of an online training program. There are many methods that can be used to conduct this assessment. In the example described here, reliable measures are in place to catch major issues before they undermine the effectiveness of the program. Surveys are always a suitable place to begin, since gaining feedback from the faculty themselves is crucial. Formative (weekly debriefs, checklist reviews, content checks, etc.) and summative (Annual Review Committee) measures then set the stage for an effective program.

Sample Course

The sample course includes links to surveys that can be distributed to participants to collect feedback.

References

Ali, N., Hodson-Carlton, K., Ryan, M., Flowers, J., et al. (2005). Online education: Needs assessment for faculty development. The Journal of Continuing Education in Nursing, 36(1), 32.

http://search.proquest.com/docview/223314007?pq-origsite=gscholar

deNoyelles, A., Cobb, C., & Lowe, D. (2012). Influence of reduced seat time on satisfaction and perception of course development goals: A case study in faculty development. Journal of Asynchronous Learning Networks, 16(2), 85-98.